LLMs — Model Architectures and Pre-Training Objectives

TL;DR: The transformer architecture, introduced in “Attention Is All You Need,” has reshaped language understanding and generation across NLP and beyond. Looking deeper, it is a versatile framework that has spawned specialized models, each addressing different challenges.

In the previous article, “LLMs — A Brief Introduction,” we covered language models, why they matter, the Transformer, its architecture types, and real-world applications. In this article, we will take a closer look at the heart of these models: their architectures. We will also look at the distinct training objectives that complement each architecture.

Let’s start the ride 🎢


At its core, the transformer architecture departs from the conventional sequential processing approach. Instead of relying on recurrent connections that process data one step at a time, transformers harness the power of parallelism and attention mechanisms to grasp intricate relationships within sequences.
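To make the contrast with step-by-step recurrence concrete, here is a minimal sketch of scaled dot-product attention in plain Python. It is an illustration under simplifying assumptions, not a full transformer layer: there are no learned projection matrices and no multiple heads, and the function names `softmax` and `attention` are chosen here for the example.

```python
import math

def softmax(xs):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors.

    Each query is handled independently of the others, which is why the
    whole sequence can be processed in parallel rather than one step at a time.
    """
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this position to every position in the sequence.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
    return outputs

# Self-attention: the same toy sequence supplies queries, keys, and values.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out = attention(x, x, x)
```

Every output row is a mixture of all input rows, so each position can draw on any other position in a single step.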

This groundbreaking innovation has paved the way for an expansive array of transformer-based models, each meticulously designed to excel in specific tasks and domains. Among these models, three distinct archetypes stand out:

Encoder Only Models

Decoder Only Models

Symbiotic Encoder-Decoder Models

These architectural variations epitomize the adaptability and versatility that the transformer framework offers, catering to a broad spectrum of AI applications and challenges.

Let’s embark on a comprehensive exploration of each architectural variation, delving into the strengths and weaknesses that characterize each unique approach.

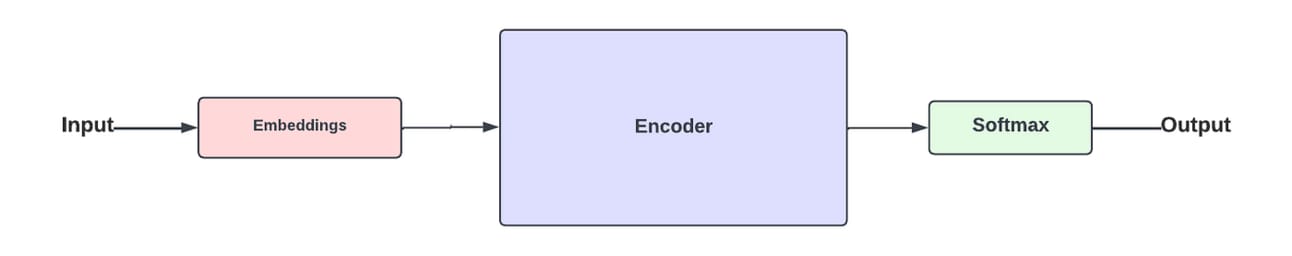

Encoder Only Models

Encoder-Only models, as the name suggests, use only the encoder stack of the transformer architecture. They excel at comprehending context and extracting meaningful features from the input data. These models process the input sequence, be it text, images, or any other form of data, and create a rich representation of it in the form of hidden states. Prominent examples include BERT (Bidirectional Encoder Representations from Transformers), ALBERT, and RoBERTa.

Encoder-Only Model

Benefits

Captures intricate relationships within input data.

Produces high-quality feature vectors that can be used for various downstream tasks.

Efficiently handles sequential data.

Limitations

Information flows only from input to representation (the attention itself is bidirectional), so a task-specific head is needed on top.

Lacks inherent generative capabilities.

Applications and Use Cases

Text Classification: Encoder-Only models excel at classifying text into predefined categories, making them well-suited for sentiment analysis, topic classification, and spam detection.

Named Entity Recognition (NER): Identifying and categorizing entities (such as names of people, organizations, locations, etc.) within the text.

Feature Extraction: Extracting meaningful features from text or other forms of data that can be used for downstream tasks like clustering or classification.

Language Understanding: Capturing contextual relationships within the text, aiding in understanding the meaning of the input.

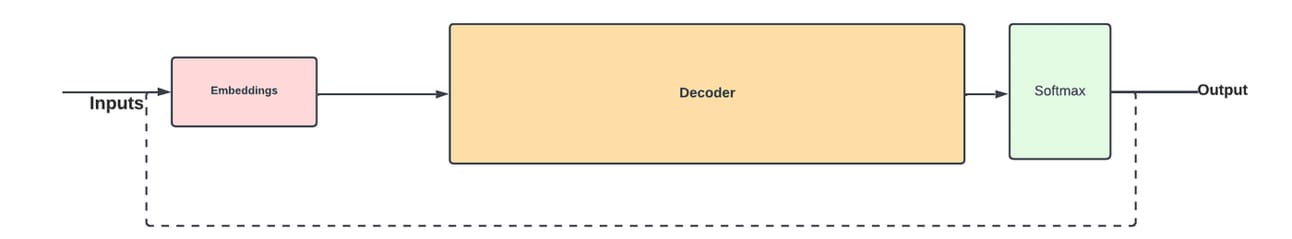

Decoder Only Models

In contrast to Encoder-Only models, Decoder-Only models specialize in generating sequences based on a given context or prompt. These models take a set of latent representations or tokens and generate a new sequence one token at a time. These architectures are ideal for tasks requiring creative output and content-based generation like language generation, machine translation, and text summarization. Prominent examples include GPT-2, GPT-3 (Generative Pre-trained Transformer 3), and ChatGPT.

Decoder-Only Model

Benefits

Enables creativity and imagination in text generation or completion of tasks.

Supports various conditional generation tasks.

Facilitates language translation and summarization.

Limitations

Sees only the preceding context at each position, so it may struggle with capturing intricate relationships within input data.

Prone to generating plausible-sounding but incorrect or nonsensical outputs.

Applications and Use Cases

Language Generation: Decoder-Only models are proficient in generating coherent and contextually relevant text, making them ideal for tasks like story generation, creative writing, and dialogue systems.

Machine Translation: Converting text from one language to another while preserving meaning and context.

Text Summarization: Condensing longer text into shorter summaries while retaining key information.

Text Completion: Predicting and generating the next words in a sentence or text based on a given context.

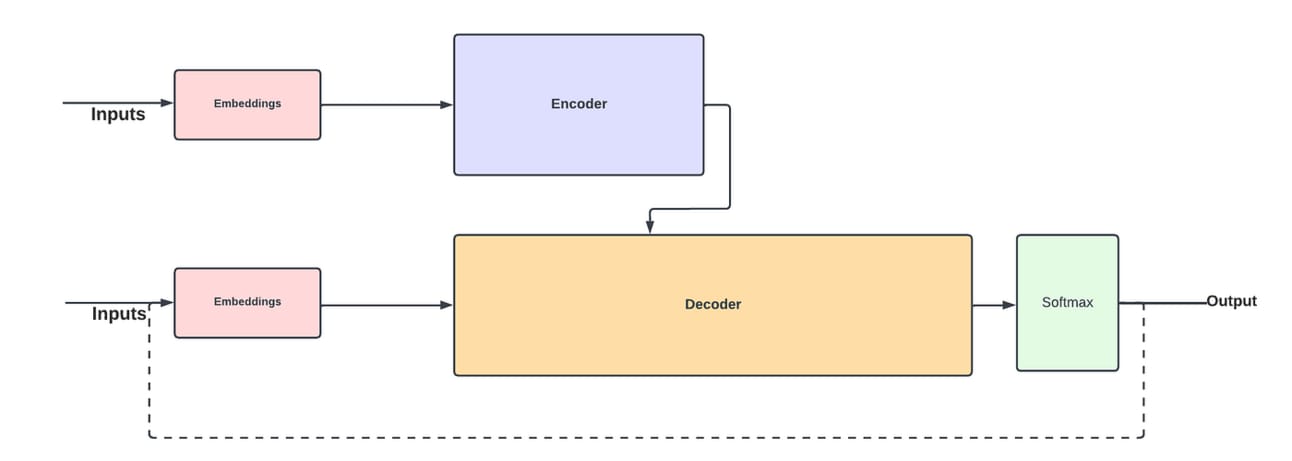

Encoder-Decoder Models

Encoder-Decoder models combine the strengths of both Encoder-Only and Decoder-Only architectures. Pairing feature extraction with sequence generation enables them to handle complex tasks like machine translation, text-to-speech synthesis, and image captioning. These models are tailored to tasks demanding input-output alignment and transformation. The encoder processes input data to create a meaningful context, and the decoder generates the desired output sequence based on that context. Well-known architectures include Transformer-based Seq2Seq (Sequence-to-Sequence) models such as T5 (Text-to-Text Transfer Transformer) and BART.

Encoder-Decoder Model

Benefits

Tackles tasks involving sequence-to-sequence mapping effectively.

Accommodates a wide range of applications, from translation to image captioning.

Leverages the power of both context comprehension and creative generation.

Limitations

Complexity in training and optimization due to the dual nature of the architecture.

Requires large amounts of training data.

Requires careful tuning and handling of the encoder-decoder interaction.

Applications and Use Cases

Machine Translation: Encoder-Decoder models are well-suited for translation tasks as they can encode the source language and then decode it into the target language.

Text-to-Speech Synthesis: Converting text into spoken audio, where the encoder processes the text and the decoder generates the corresponding speech.

Image Captioning: Encoding the visual features of an image and generating a descriptive caption for it.

Question-Answering: Taking a question as input, encoding it, and generating a relevant answer as output.

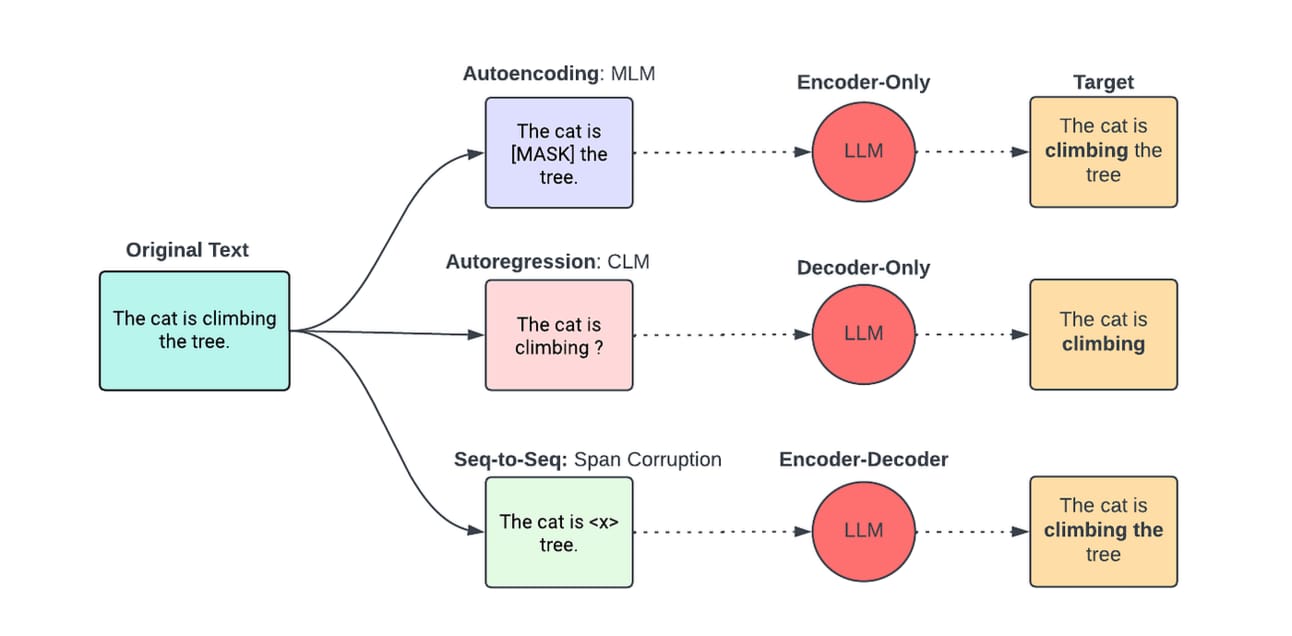

Pre-Training Objectives

The pre-training objective refers to the specific task or goal that a language model aims to achieve during its initial training phase. This phase is crucial in building a strong foundation for the model’s understanding of language and its ability to generate coherent and contextually relevant text.

When pre-training Transformer-based large language models, several objectives are commonly used to guide the learning process.

Encoder Only Models

Encoder-Only models are trained using various techniques that enhance their ability to understand the context and extract meaningful features from input data. Let’s explore the distinct training styles commonly employed for Encoder-Only models:

Masked Language Modeling (MLM)

MLM is a prevalent training approach used in Encoder-Only models like BERT. During training, a portion of the input tokens is randomly masked, and the model is tasked with predicting the masked tokens based on the surrounding context. This encourages the model to grasp contextual relationships in both directions and aids in capturing bidirectional information flow. MLM fosters a comprehensive understanding of the input data’s structure and semantics.

Span Corruption and Reconstruction

Another training technique involves span corruption, where contiguous segments of the input data are purposefully masked or altered (SpanBERT, for example, masks contiguous spans rather than individual tokens). The model is then trained to reconstruct the original uncorrupted sequence. This approach helps the model learn robust representations by focusing on reconstructing meaningful segments, making it more resilient to noise and variations in the data.

Intra-Sentence and Inter-Sentence Relationships

Encoder-Only models can be trained to capture relationships between tokens within a sentence (intra-sentence) and between sentences (inter-sentence). BERT, for example, adds a next sentence prediction objective in which the model predicts whether one sentence follows another. By learning to understand both short-range and long-range dependencies, the model becomes adept at contextual comprehension across various scales.
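The masked language modeling objective described above can be sketched in a few lines. This is a simplified illustration rather than BERT’s exact recipe: BERT selects about 15% of tokens, but it also swaps some selected tokens for random ones or leaves them unchanged, which this sketch omits. The function name `mask_tokens` and the use of `None` for ignored positions are assumptions made for the example.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Return (inputs, labels) for masked language modeling.

    inputs: the token list with some positions replaced by MASK.
    labels: the original token at masked positions, None elsewhere
            (None marks positions the loss would ignore).
    """
    rng = random.Random(seed)  # seeded so the example is repeatable
    inputs, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            inputs.append(MASK)
            labels.append(tok)   # the model must recover this token
        else:
            inputs.append(tok)
            labels.append(None)  # unmasked: not part of the loss
    return inputs, labels

tokens = "the quick brown fox jumps over the lazy dog".split()
inputs, labels = mask_tokens(tokens, mask_prob=0.3)
```

Because the model sees the tokens on both sides of every mask, the prediction can use left and right context at once.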

Decoder Only Models

Causal Language Modeling (CLM)

CLM is a prominent training approach utilized in Decoder-Only models like GPT-3. During CLM training, the model is presented with a sequence of tokens and learns to predict the next token in the sequence based only on the preceding tokens. This encourages the model to capture language patterns, grammatical structures, and semantic relationships. CLM fosters creative text generation and enables the model to produce contextually coherent outputs.

Autoregressive Training

Autoregressive training is the mechanism behind CLM: each token in the sequence is predicted one at a time, conditioned on the tokens before it. This training style aligns well with the sequential nature of text generation tasks and encourages the model to learn the dependencies and ordering of tokens within the sequence.

Nucleus Sampling (Top-p Sampling)

Nucleus sampling is a decoding strategy rather than a training objective. At each generation step, the model samples from the smallest set of most likely tokens whose cumulative probability reaches a threshold p. The related top-k sampling instead keeps a fixed number k of the most likely tokens. Both approaches introduce randomness while maintaining control over the diversity of generated sequences, and can lead to more varied and engaging outputs.

Prompt Engineering and Conditioning

During fine-tuning or at inference time, Decoder-Only models can be conditioned on specific prompts or context, guiding their generation process toward desired themes or topics. This allows practitioners to steer the model’s output and generate content aligned with specific requirements.
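The difference between the training side (next-token prediction) and the decoding side (sampling from a truncated distribution) can be sketched as follows. This is a toy illustration with assumed names (`next_token_pairs`, `top_p_filter`, `sample`) and a hand-written probability table standing in for a model’s output. Real systems work on token IDs and logits.

```python
import random

def next_token_pairs(tokens):
    """Causal LM training targets: each prefix predicts the token after it."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]

def top_p_filter(probs, p=0.9):
    """Keep the smallest set of most likely tokens whose cumulative
    probability reaches p, then renormalize so the kept set sums to 1."""
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    kept, cumulative = [], 0.0
    for token, prob in ranked:
        kept.append((token, prob))
        cumulative += prob
        if cumulative >= p:
            break
    total = sum(prob for _, prob in kept)
    return {token: prob / total for token, prob in kept}

def sample(probs, rng):
    """Draw one token according to the given probabilities."""
    tokens = list(probs)
    weights = [probs[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]

# Training side: every position becomes a (context, target) example.
pairs = next_token_pairs(["the", "cat", "sat"])

# Decoding side: a made-up next-token distribution, truncated to its nucleus.
toy = {"mat": 0.5, "sofa": 0.3, "moon": 0.15, "xylophone": 0.05}
nucleus = top_p_filter(toy, p=0.9)
choice = sample(nucleus, random.Random(0))
```

With `p=0.9`, the unlikely tail token is dropped before sampling, while the number of surviving tokens still adapts to how peaked the distribution is. A fixed top-k cutoff does not adapt in this way.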

Encoder-Decoder Models

Span Corruption and Alignment

Encoder-Decoder models can benefit from a combination of span corruption and alignment techniques. Span corruption, as used in T5, involves masking or altering segments of the input data and training the decoder to reconstruct the missing content. Alignment techniques encourage accurate transformation by aligning input and output sequences during training, ensuring the model learns to produce meaningful outputs based on the provided context.

Sequence-to-Sequence Mapping

The fundamental training approach for Encoder-Decoder models involves mapping an input sequence to an output sequence. During training, the encoder processes the input sequence to create a context representation, which is then used by the decoder to generate the corresponding output sequence. This approach is foundational for tasks like machine translation, where the input and output sequences have a clear alignment.
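Span corruption turns unlabeled text into exactly this kind of input-output pair. The sketch below follows the T5-style idea of replacing each dropped span with a sentinel token in the encoder input and listing the dropped content after the matching sentinel in the decoder target. The function name `corrupt_spans` is hypothetical, the sentinel strings are placeholders, and the spans are passed in explicitly instead of being sampled at random, so the example stays deterministic. A closing sentinel at the end of the target is also omitted for brevity.

```python
def corrupt_spans(tokens, spans):
    """Build (encoder_input, decoder_target) for span corruption.

    spans: sorted, non-overlapping (start, end) index pairs, end exclusive.
    """
    inputs, targets = [], []
    cursor = 0
    for i, (start, end) in enumerate(spans):
        sentinel = f"<extra_id_{i}>"          # placeholder sentinel name
        inputs.extend(tokens[cursor:start])   # keep text before the span
        inputs.append(sentinel)               # the span collapses to one sentinel
        targets.append(sentinel)              # target names the sentinel...
        targets.extend(tokens[start:end])     # ...then spells out what was removed
        cursor = end
    inputs.extend(tokens[cursor:])            # keep text after the last span
    return inputs, targets

tokens = "Thank you for inviting me to your party last week".split()
enc_in, dec_out = corrupt_spans(tokens, [(2, 4), (8, 9)])
# enc_in : Thank you <extra_id_0> me to your party <extra_id_1> week
# dec_out: <extra_id_0> for inviting <extra_id_1> last
```

The decoder only has to produce the missing pieces, which keeps targets short compared with reconstructing the full sequence.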

Copy Mechanisms and Attention Mechanisms

Copy mechanisms allow Encoder-Decoder models to directly copy tokens from the input sequence to the output sequence, enhancing their ability to retain important information. Attention mechanisms, such as self-attention and cross-attention, help the model focus on relevant parts of the input during encoding and decoding, contributing to more accurate and contextually appropriate generation.

Teacher Forcing and Scheduled Sampling

Teacher forcing feeds the true previous token to the decoder at each step during training. This is efficient, but it creates a mismatch known as “exposure bias”: at inference the decoder must condition on its own, possibly incorrect, predictions, which it never saw during training. Scheduled sampling mitigates this by gradually transitioning from the true tokens to the model’s own predictions over the course of training, helping the model generate more consistent and coherent sequences.
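The difference between the two regimes comes down to what the decoder is fed at each step. The sketch below is a simplified illustration with assumed names: `decoder_inputs` is hypothetical, and `predictions` stands in for tokens a model would produce on the fly during training. Setting `sampling_prob` to 0 gives pure teacher forcing. Raising it over time approximates a scheduled sampling curriculum.

```python
import random

def decoder_inputs(target, predictions, sampling_prob, rng, bos="<s>"):
    """Build the decoder's input sequence for one training example.

    Step t is fed the token from step t-1: the ground-truth token
    (teacher forcing) or, with probability sampling_prob, the model's
    own earlier prediction (scheduled sampling).
    """
    inputs = [bos]  # decoding always starts from a begin-of-sequence token
    for t in range(1, len(target)):
        use_model = rng.random() < sampling_prob
        inputs.append(predictions[t - 1] if use_model else target[t - 1])
    return inputs

target = ["le", "chat", "dort", "</s>"]
preds = ["le", "chien", "dort", "</s>"]   # stand-in for model outputs
rng = random.Random(0)

teacher_forced = decoder_inputs(target, preds, 0.0, rng)  # always ground truth
free_running = decoder_inputs(target, preds, 1.0, rng)    # always model output
```

In the free-running case the decoder sees its own mistake (“chien”) as input, which is the situation it faces at inference time.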

Conclusion

In this comprehensive exploration, we delved into the intricacies of three distinct LLM architectures: Encoder-Only Models, Decoder-Only Models, and Encoder-Decoder Models. Each architecture plays a unique role in the realm of data science and natural language processing, catering to specific tasks and challenges. By understanding these architectures and their training styles, we gain deeper insights into Language Models and their transformative impact on a wide array of applications.