Introduction

In our journey through the realm of Large Language Models (LLMs), we’ve uncovered the foundational aspects of LLMs in “LLMs — A Brief Introduction”, explored their diverse architectures, and delved into their pre-training objectives in “LLMs — Model Architecture and Pre-training Objectives”. Now, it’s time to embark on a captivating expedition into the realm of prompt engineering and model configurations. Prompt engineering plays a pivotal role in shaping the output of LLMs, allowing us to harness their true potential and tailor responses to our needs.

In this article, we unveil the strategies behind prompt engineering, showcase the varied types of prompt techniques, and delve into the intricate dance between prompt engineering and model configurations to enhance LLM responses.

Prompt Engineering

Prompt engineering involves crafting input prompts that guide LLMs toward generating desired outputs. By providing clear and contextually rich instructions, we can leverage LLMs’ language generation capabilities to create tailored responses for a multitude of applications. Let’s delve into prompt engineering and understand different types of prompt strategies to enhance the LLM response.

Direct Instruction Prompts

Direct instruction prompts are explicit and straightforward, guiding the LLM to produce precise and specific answers or content. These prompts are ideal for scenarios where clarity and precision are paramount.

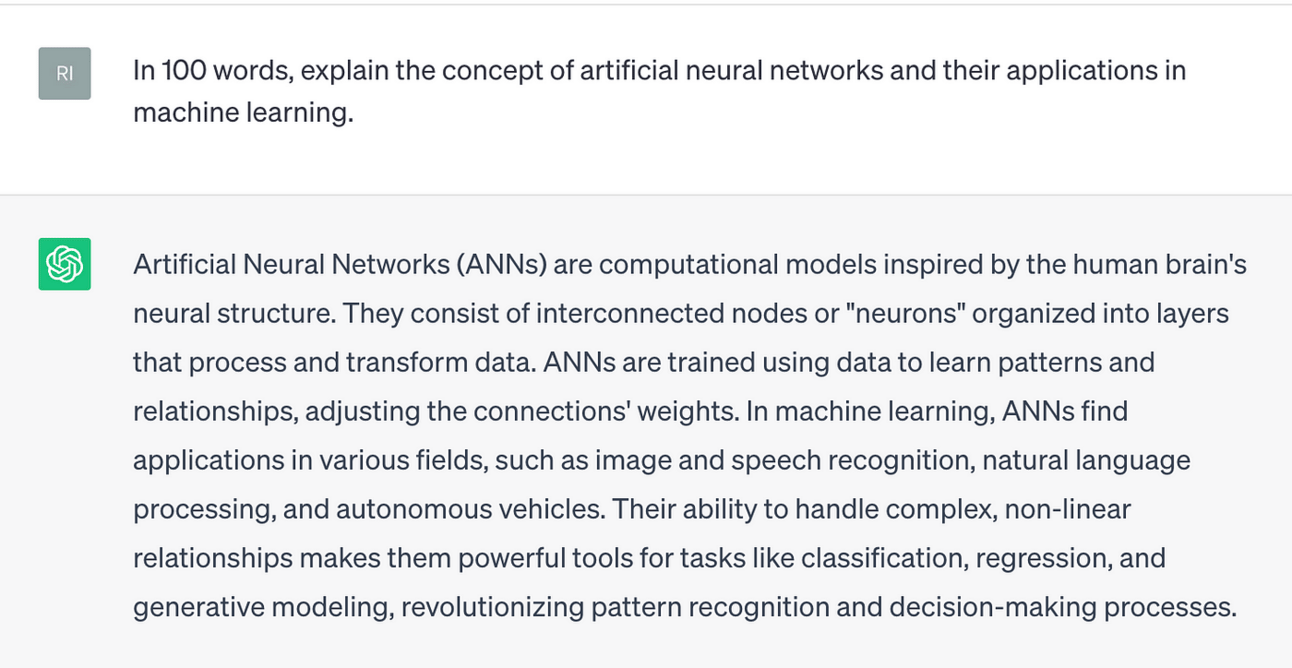

Example Prompt: “In 100 words, explain the concept of artificial neural networks and their applications in machine learning.”

ChatGPT response for above prompt

In this case, the prompt leaves no room for interpretation, explicitly instructing the LLM to provide a concise explanation of artificial neural networks and their role in machine learning.

Contextual Prompts

Contextual prompts set the stage by providing a scenario or context, allowing LLMs to generate responses that align with the provided background. They are particularly useful for generating narratives, dialogues, and creative content.

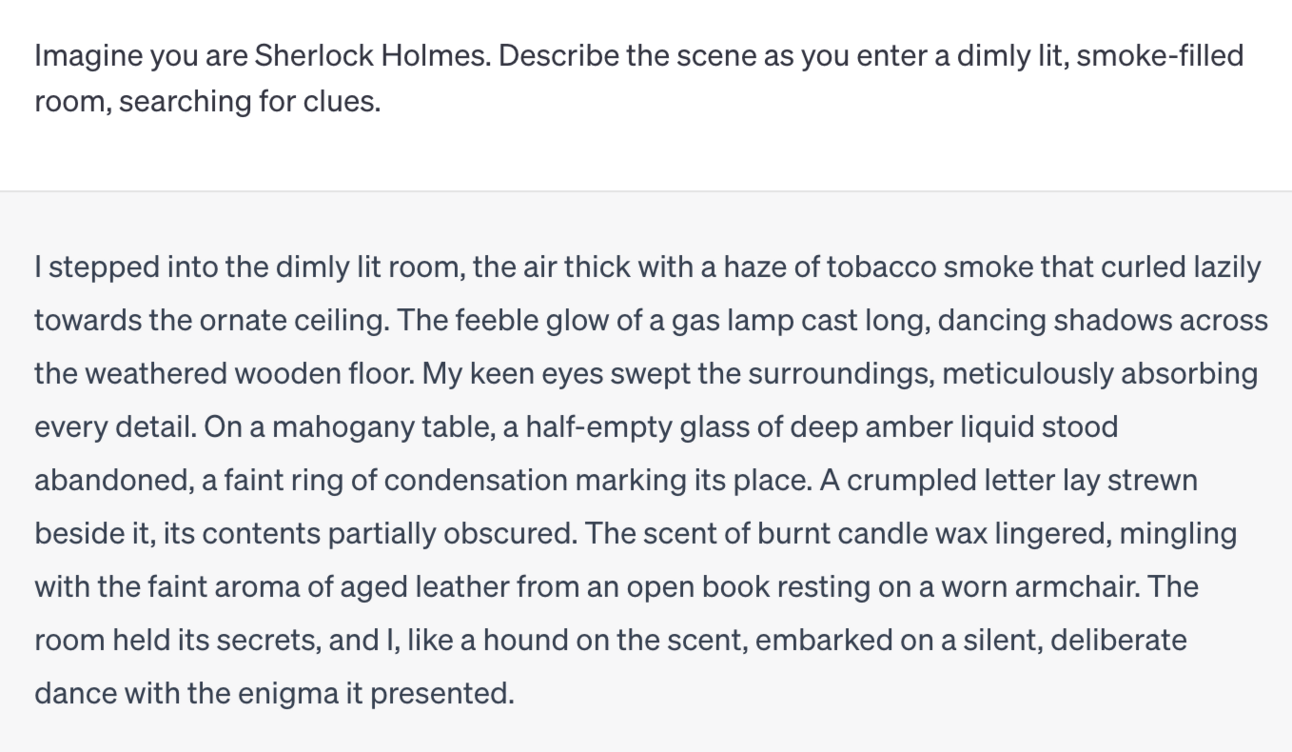

Example Prompt: “Imagine you are Sherlock Holmes. Describe the scene as you enter a dimly lit, smoke-filled room, searching for clues.”

ChatGPT generated response

By immersing the model in the role of a detective and setting the scene, the LLM is guided to generate a response that aligns with the provided context.

Systematic Variation Prompts

Systematic variation prompts involve tweaking the prompt to observe how the LLM’s responses change. This technique is invaluable for exploring the boundaries of the model’s knowledge and creativity.

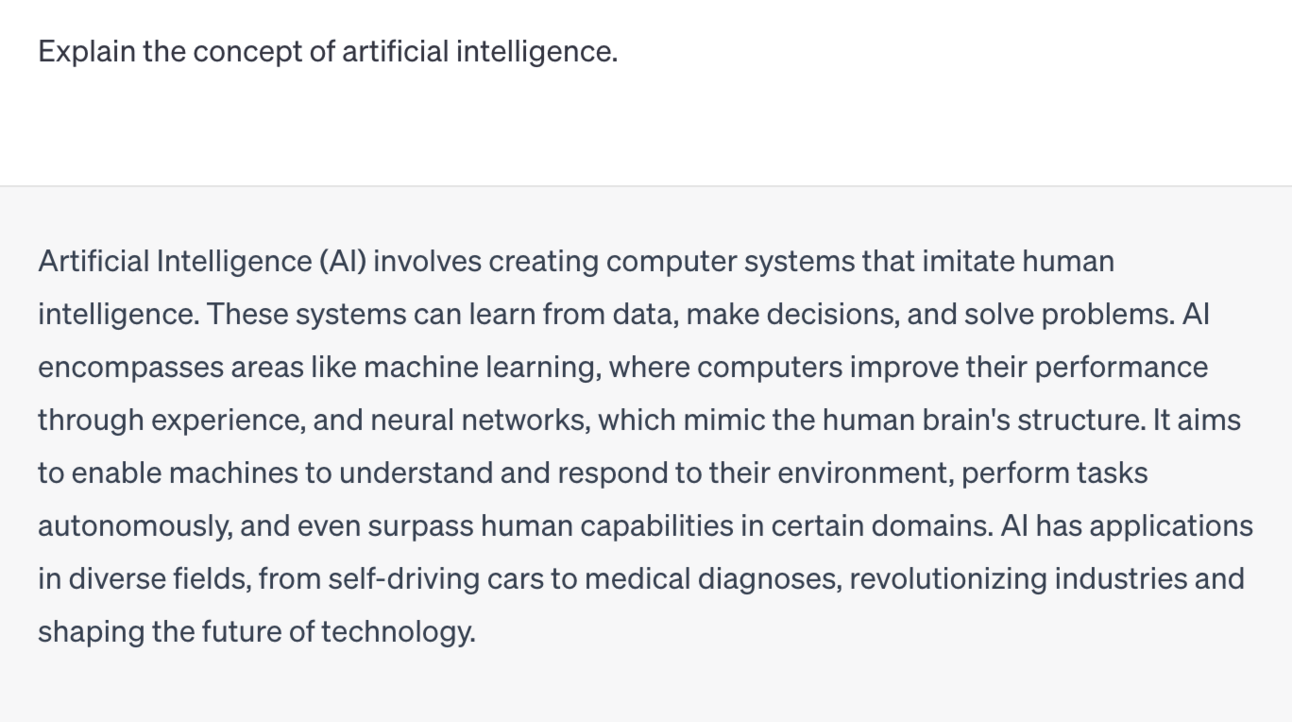

Example Prompt Variation 1: “Explain the concept of artificial intelligence.”

ChatGPT generated response

Example Prompt Variation 2: “Describe the idea of artificial intelligence.”

ChatGPT generated response

By systematically varying the prompt while maintaining the same underlying concept, you can gauge how the LLM’s responses change based on subtle alterations.
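To run this kind of comparison repeatedly, the variations can be generated from templates rather than typed by hand. The snippet below is a minimal sketch; the function name `build_variations` and the template wording are illustrative assumptions, and the resulting prompts would still need to be sent to an LLM of your choice.

```python
from itertools import product


def build_variations(templates, topics):
    """Fill every template with every topic, in a stable order."""
    return [t.format(topic=topic) for t, topic in product(templates, topics)]


# Illustrative templates: same underlying request, slightly different wording.
templates = [
    "Explain the concept of {topic}.",
    "Describe the idea of {topic}.",
]
topics = ["artificial intelligence"]

variations = build_variations(templates, topics)
for i, prompt in enumerate(variations, start=1):
    print(f"Variation {i}: {prompt}")
```

Keeping the topic fixed and changing only the template makes it easier to attribute any difference in the responses to the wording itself.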

Inverse Prompts

Inverse prompts ask the LLM to generate the input itself, making them suitable for tasks like question generation and code completion.

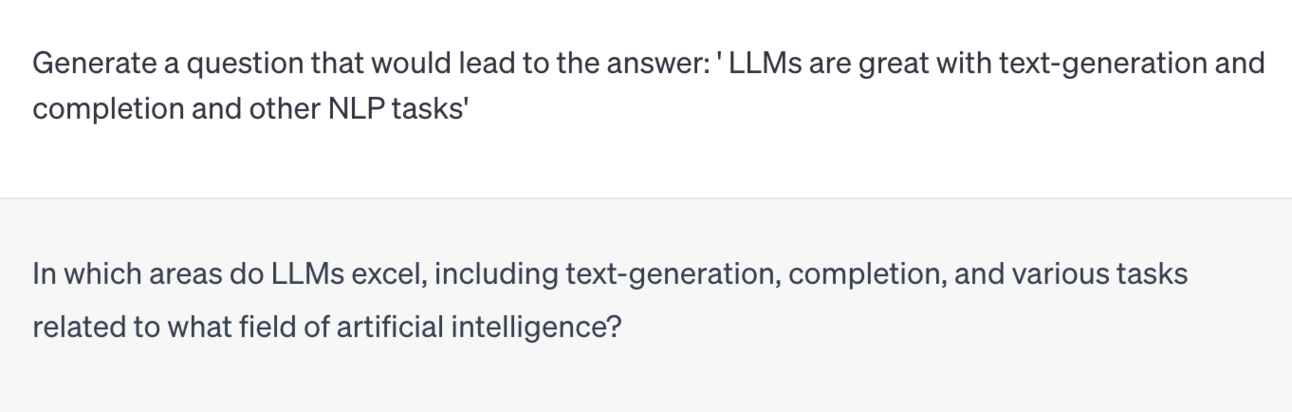

Example Prompt: “Generate a question that would lead to the answer: ‘LLMs are great with text-generation and completion and other NLP tasks’”

ChatGPT generated response

In this case, the LLM is prompted to generate a question that corresponds to the provided answer, allowing it to exhibit its understanding of the relationship between questions and answers.

Bridging Prompts

Bridging prompts involve providing partial information and requiring the LLM to complete or bridge the gap. This strategy can help LLMs generate coherent text by leveraging the provided context.

Example Prompt: “Complete the following sentence: ‘In the realm of artificial intelligence, how large language model supporting …’”

ChatGPT generated response

By offering a partial sentence, the LLM is guided to generate a completion that fits seamlessly within the provided context.

Socratic Prompts

Socratic prompts emulate the Socratic method of questioning, where the LLM is guided through a series of questions to arrive at a reasoned conclusion. This strategy is effective for guiding the LLM to generate logical and analytical responses.

Example Prompt: “What are the primary factors that contribute to climate change? List them and explain the interrelationships between these factors.”

ChatGPT generated response

By structuring the prompt as a series of questions, the LLM is prompted to engage in a thoughtful analysis of the topic, generating a comprehensive response.

Mixed Context Prompts

Mixed context prompts involve combining multiple contexts or scenarios within the same prompt. This strategy can lead to creative and unexpected responses by challenging the LLM to integrate diverse elements.

Example Prompt: “Write a short story that combines elements of science fiction and medieval fantasy. Imagine a world where futuristic technology coexists with ancient castles and knights.”


By blending two distinct contexts, the LLM is encouraged to produce imaginative and unique narratives that draw from both genres.

Prompting Techniques

Prompting involves a spectrum of methods that induce context-sensitive behavior in Large Language Models (LLMs), enabling the generation of targeted outputs. Numerous techniques contribute to crafting prompts that enhance LLM responses. This section covers a handful of the most widely used ones.

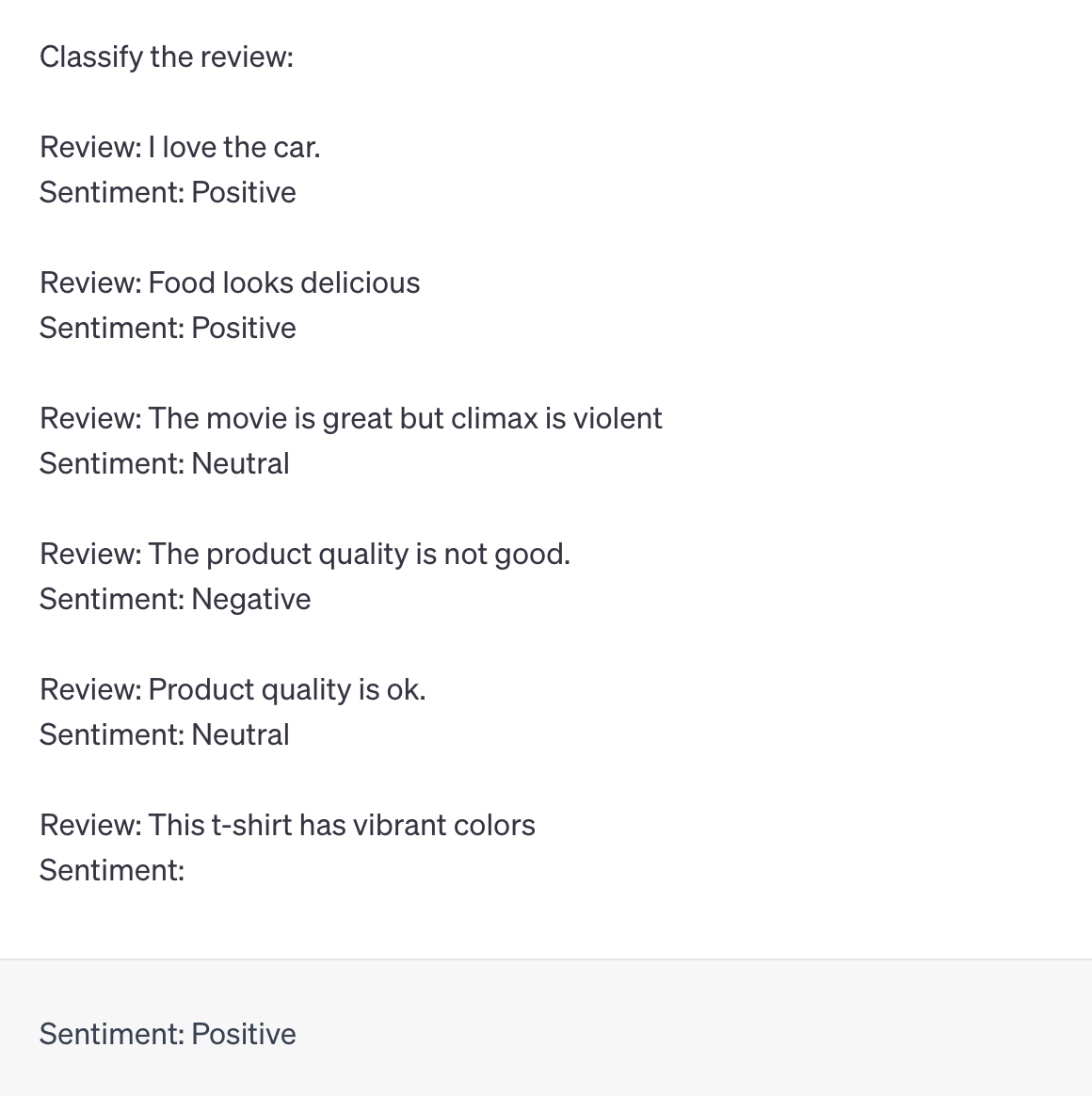

Zero-Shot Learning

In zero-shot learning, LLMs are provided with a prompt that describes the task but doesn’t provide any specific examples. The model is expected to generate a response based solely on the given instructions.

One-Shot Learning

One-shot learning extends zero-shot learning by providing one example input and output. The example serves as a guide for the model to understand the task and generate relevant responses.

Few-Shot Learning

Few-shot learning extends one-shot learning by providing a small number of example inputs and outputs. These examples give the model a stronger understanding of the task, helping it generate controlled and relevant responses.

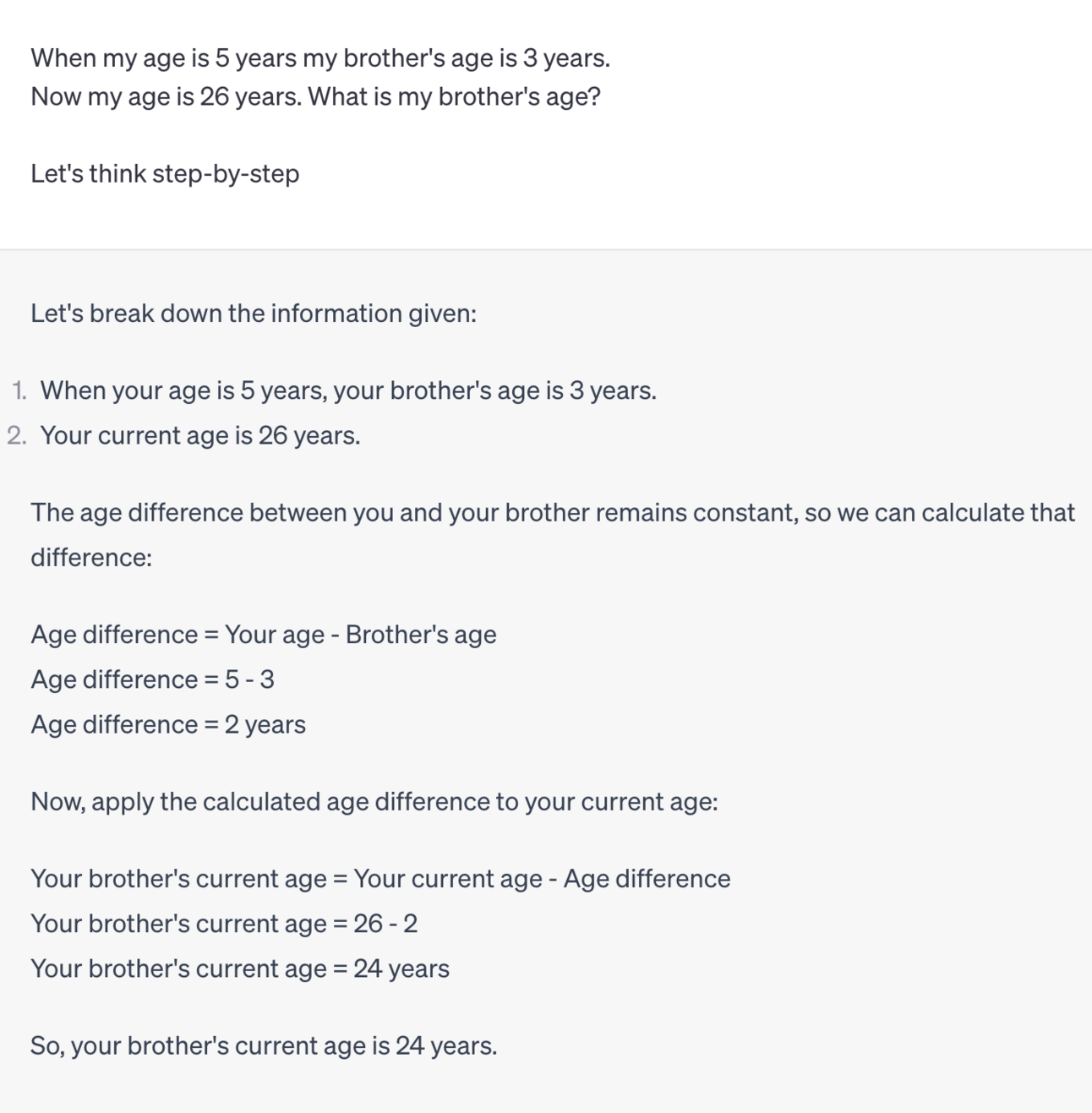
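The difference between the three techniques comes down to how many worked examples sit between the instruction and the new input. Here is a minimal sketch that builds all three prompts from the same pieces; the helper `build_prompt`, the sentiment task, and the `Input:` / `Output:` layout are assumptions chosen for illustration, not a required format.

```python
def build_prompt(instruction, examples, query):
    """Assemble a prompt: instruction, then any worked examples, then the new input."""
    lines = [instruction, ""]
    for text, label in examples:
        lines += [f"Input: {text}", f"Output: {label}", ""]
    lines += [f"Input: {query}", "Output:"]
    return "\n".join(lines)


instruction = "Classify the sentiment of the input as Positive or Negative."
examples = [
    ("I loved this movie.", "Positive"),
    ("The service was terrible.", "Negative"),
    ("What a wonderful day.", "Positive"),
]
query = "The battery died after an hour."

zero_shot = build_prompt(instruction, [], query)           # no examples
one_shot = build_prompt(instruction, examples[:1], query)  # one example
few_shot = build_prompt(instruction, examples, query)      # several examples

print(few_shot)
```

Each prompt ends with an open `Output:` line, so the model's natural continuation is the answer for the new input.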

Chain of Thoughts

This approach involves providing a series of prompts or questions that create a logical progression of thought. The LLM generates responses that build upon previous prompts, simulating a conversation or multi-step process.
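One way to picture this progression is a loop that feeds each new question to the model together with everything asked and answered so far. The sketch below assumes a hypothetical `ask` callable standing in for a real model call; `fake_llm` is a stub that only reports how much earlier context it received, so the example runs without any API.

```python
def run_chain(questions, ask):
    """Ask each question in order, passing the transcript so far as context."""
    transcript = []
    for question in questions:
        context = "\n".join(transcript)
        prompt = f"{context}\nQ: {question}\nA:".strip()
        answer = ask(prompt)
        transcript += [f"Q: {question}", f"A: {answer}"]
    return transcript


def fake_llm(prompt):
    """Hypothetical stand-in for a model call; counts the earlier answers it was shown."""
    return f"(answer using {prompt.count('A: ')} earlier answers)"


questions = [
    "What is a large language model?",
    "How does a prompt influence its output?",
    "Given that, why does prompt wording matter?",
]

transcript = run_chain(questions, fake_llm)
print("\n".join(transcript))
```

Replacing `fake_llm` with a function that calls an actual model would let each answer build on the previous ones in the same way.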

Model Configurations

Let’s dive into the model configuration parameters: max_tokens, temperature, top_k, and top_p. These parameters play a significant role in shaping the behavior and output of Large Language Models (LLMs).

Max Tokens: This parameter limits the length of the generated response to a certain number of tokens. Tokens are the fundamental units of text in language models and can represent words, subwords, or characters. Setting max_tokens restricts the length of the output text, ensuring that the generated response stays within the specified token limit.

For example, if you set max_tokens to 50, the LLM will generate a response of at most 50 tokens, even if the input prompt calls for a longer response.

Temperature: This parameter controls the randomness of the LLM's output by adjusting the probability distribution used to pick the next token. A higher temperature makes the output more diverse and creative, as it increases the likelihood of selecting less probable tokens. Conversely, a lower temperature makes the output more focused and deterministic, favoring highly probable tokens.

- High Temperature (e.g., 0.8): Generates more random and diverse outputs.

- Low Temperature (e.g., 0.2): Generates more deterministic and focused outputs.

Top-k Sampling: This technique limits the choices for the next token to the k most probable tokens. It helps maintain coherence and relevance in the generated text by only considering a subset of the most likely tokens.

For instance, if you set top_k to 50, the LLM will only consider the 50 most likely tokens at each step, ensuring that the generated response remains contextually relevant.

Top-p Sampling (Nucleus Sampling): Top-p sampling, also known as nucleus sampling, restricts the choice of the next token to the smallest set of most probable tokens whose cumulative probability reaches a threshold p, and then samples from that set. Unlike top-k, the number of candidates varies from step to step depending on how confident the model is. This approach keeps the output relevant while still allowing for some diversity.

For example, if you set top_p to 0.9, the LLM adds tokens to the candidate set in order of probability until their cumulative probability reaches 90%, then picks the next token from that set, maintaining both relevance and diversity.
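To make the four parameters concrete, here is a small, self-contained sketch of how they could act on a single next-token choice. It is a toy under stated assumptions, not how any particular library implements sampling: the logits in `toy_logits` are made up, the function names are hypothetical, and a real model recomputes its distribution after every token and may stop before max_tokens is reached.

```python
import math
import random


def next_token_distribution(logits, temperature=1.0, top_k=None, top_p=None):
    """Return {token: probability} after temperature scaling and top-k / top-p filtering."""
    # Temperature: divide logits before softmax; lower values sharpen the distribution.
    scaled = {tok: logit / temperature for tok, logit in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    weights = {tok: math.exp(v - peak) for tok, v in scaled.items()}
    total = sum(weights.values())
    ranked = sorted(
        ((tok, w / total) for tok, w in weights.items()),
        key=lambda pair: pair[1],
        reverse=True,
    )

    # Top-k: keep only the k most probable tokens.
    if top_k is not None:
        ranked = ranked[:top_k]

    # Top-p: keep the smallest prefix whose cumulative probability reaches p.
    if top_p is not None:
        kept, cumulative = [], 0.0
        for tok, prob in ranked:
            kept.append((tok, prob))
            cumulative += prob
            if cumulative >= top_p:
                break
        ranked = kept

    # Renormalise so the surviving probabilities sum to 1.
    norm = sum(prob for _, prob in ranked)
    return {tok: prob / norm for tok, prob in ranked}


def generate(logits, max_tokens, rng, **sampling):
    """Sample max_tokens tokens from a fixed toy distribution (a real model may stop earlier)."""
    dist = next_token_distribution(logits, **sampling)
    tokens, probs = list(dist), list(dist.values())
    return [rng.choices(tokens, weights=probs)[0] for _ in range(max_tokens)]


# Hypothetical logits for four candidate next tokens.
toy_logits = {"cat": 2.0, "dog": 1.0, "fish": 0.0, "rock": -1.0}

print(next_token_distribution(toy_logits, temperature=0.2))  # sharply peaked
print(next_token_distribution(toy_logits, temperature=0.8))  # flatter
print(next_token_distribution(toy_logits, top_k=2))          # two candidates survive
print(next_token_distribution(toy_logits, top_p=0.9))        # nucleus of three tokens
print(generate(toy_logits, max_tokens=5, rng=random.Random(0), temperature=0.8, top_k=3))
```

With these toy logits, top_k=2 keeps only "cat" and "dog", while top_p=0.9 also keeps "fish", because the first two tokens together cover only about 88% of the probability mass.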

Conclusion: Orchestrating LLM Magic Through Prompt Engineering

In the captivating realm of Large Language Models, prompt engineering stands as an artistic endeavor, unleashing the boundless potential of LLMs. Paired with model configurations, this technique empowers us to mold responses that mirror our intentions flawlessly. As we venture deeper into the LLM landscape, our next exploration will demystify model configurations — revealing the cryptic influence of temperature, top-k, top-p, and other pivotal parameters that shape these exceptional language models. Await our next chapter, where you’ll gain mastery over the nuanced orchestration of LLM behavior. Until then, continue to experiment, innovate, and savor the captivating journey through the realm of LLMs.